
AR2-D2: Diligently gathered human demonstrations serve as the unsung heroes empowering the progression of robot learning. Today, demonstrations are collected by training people to use specialized controllers, which (tele-)operate robots to manipulate a small number of objects.

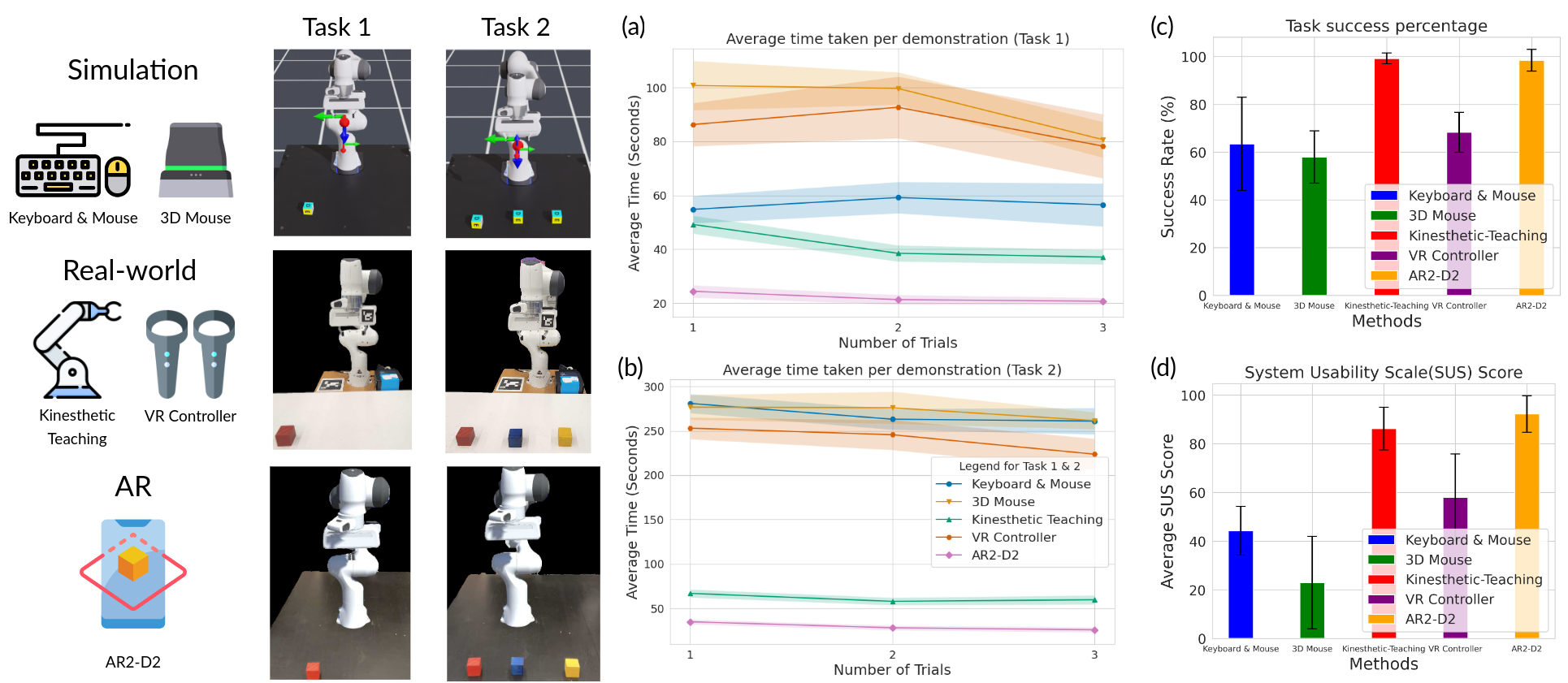

By contrast, we introduce AR2-D2: a system for collecting demonstrations which (1) does not require people with specialized training, (2) does not require any real robots during data collection, and therefore, (3) enables manipulation of diverse objects with a real robot. AR2-D2 is a framework in the form of an iOS app that people can use to record a video of themselves manipulating any object whilst simultaneously capturing the essential data modalities for training a real robot. We show that data collected via our system enables training behavior cloning agents to manipulate real objects. Our experiments further show that training with our AR data is as effective as training with real-world robot demonstrations.

Moreover, our user study indicates that users find AR2-D2 intuitive to use and that, unlike four other frequently employed methods for collecting robot demonstrations, it requires no training.

We designed AR2-D2 as an iOS application that runs on an iPhone or iPad. Since many modern phones and tablets come equipped with built-in LiDAR, we can effectively place AR robots into the real world. The application is built on the Unity Engine and the AR Foundation kit. It receives camera sensor data, including the camera intrinsics and extrinsics, and depth directly from the device's built-in functionality. The AR Foundation kit enables projecting the Franka Panda robot arm's URDF into physical space. To determine the user's 2D hand pose, we use Apple's human pose detection algorithm; this 2D pose, together with the depth map, is used to reconstruct the 3D pose of the human hand. By continuously tracking the hand pose at 30 frames per second, the AR robot's end-effector can mimic the pose of the hand.
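The step of lifting a 2D hand keypoint to 3D with the depth map can be sketched as a standard pinhole back-projection. This is a minimal sketch, not the app's implementation: it assumes an OpenCV-style camera frame (x right, y down, z forward; ARKit's camera space uses a different, OpenGL-style axis convention, so a real pipeline would need a conversion), a depth map in meters already aligned pixel-for-pixel with the RGB image, intrinsics `K` as a 3×3 matrix, and a camera-to-world extrinsic `T_world_cam` as a 4×4 matrix. The function name `backproject_keypoint` is hypothetical.

```python
import numpy as np

def backproject_keypoint(u, v, depth_map, K, T_world_cam):
    """Lift a 2D pixel (u, v) to a 3D point in world coordinates.

    Assumptions (illustrative, not AR2-D2's code): pinhole camera with an
    OpenCV-style frame, metric depth aligned with the RGB image,
    K = 3x3 intrinsics, T_world_cam = 4x4 camera-to-world transform.
    """
    z = float(depth_map[int(round(v)), int(round(u))])  # depth at the keypoint (row v, column u)
    fx, fy = K[0, 0], K[1, 1]
    cx, cy = K[0, 2], K[1, 2]
    # Invert the pinhole projection: u = fx * x / z + cx, v = fy * y / z + cy
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    p_cam = np.array([x, y, z, 1.0])  # homogeneous point in the camera frame
    p_world = T_world_cam @ p_cam     # express the point in the world frame
    return p_world[:3]
```

For example, with `fx = fy = 500`, `(cx, cy) = (320, 240)`, a constant depth of 0.8 m, and a camera translated 0.1 m along world x, the pixel (370, 240) maps to roughly (0.18, 0.0, 0.8). Repeating this for every frame at 30 fps yields the hand trajectory that the AR end-effector follows.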

Given language instructions describing a task (e.g., "Pick up the plastic bowl"), we hire users to generate demonstrations using AR2-D2. From each user-generated demonstration video, we generate training data that can be used to train a policy and deploy it on a real robot. To create useful training data, we convert the video into one in which an AR robot appears to manipulate the object. To do so, we segment out and remove the human hand using Segment-Anything. We fill the gap left by the removed hand with a video in-painting technique known as E2FGVI. Finally, we render the AR robot arm moving to the key-points identified from the user's hand. The processed video makes it appear as if an AR robot arm manipulated the real-world object, so it can be used as training data for vision-based imitation learning. Additionally, since we have access to the scene's depth estimate, we can also generate a 3D voxelized representation of the scene and use it to train agents like Perceiver-Actor.
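The voxelized representation mentioned above can be illustrated with a minimal sketch: back-project every depth pixel into a point cloud, then bin the points into a fixed occupancy grid over the workspace. The function names, the axis-aligned workspace bounds, and the binary-occupancy encoding are assumptions for illustration only; the voxel features used by Perceiver-Actor are richer than this binary grid.

```python
import numpy as np

def depth_to_points(depth, K):
    """Back-project an (H, W) metric depth map into an (N, 3) camera-frame point cloud."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # u: column index, v: row index
    x = (u - K[0, 2]) * depth / K[0, 0]
    y = (v - K[1, 2]) * depth / K[1, 1]
    pts = np.stack([x, y, depth], axis=-1).reshape(-1, 3)
    return pts[pts[:, 2] > 0]  # drop pixels with missing (zero) depth

def voxelize(points, bounds_min, bounds_max, grid_size):
    """Binary occupancy grid of shape (grid_size,)*3 over an axis-aligned box."""
    bounds_min = np.asarray(bounds_min, dtype=float)
    bounds_max = np.asarray(bounds_max, dtype=float)
    voxel_size = (bounds_max - bounds_min) / grid_size
    idx = np.floor((points - bounds_min) / voxel_size).astype(int)
    inside = np.all((idx >= 0) & (idx < grid_size), axis=1)  # discard points outside the box
    grid = np.zeros((grid_size,) * 3, dtype=bool)
    occ = idx[inside]
    grid[occ[:, 0], occ[:, 1], occ[:, 2]] = True
    return grid
```

In practice the camera-frame points would first be transformed into the world (robot) frame with the extrinsics, so that the grid lines up with the robot's workspace.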

We conducted a user study with N=10 participants and found that AR2-D2 is significantly faster than four other conventional interfaces for collecting robot demonstrations, both in simulation and in the real world.

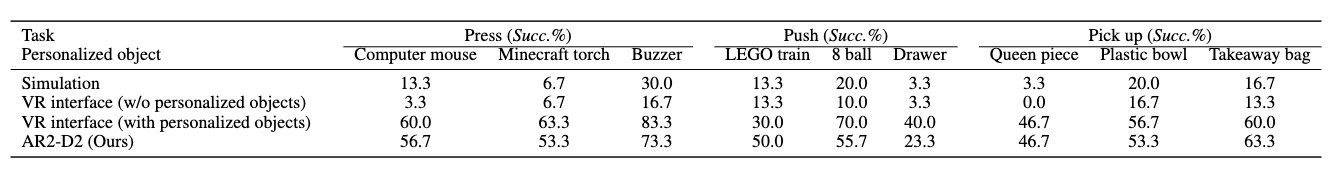

We also compared data collected with AR2-D2 against several baseline approaches and found that AR2-D2 demonstrations yield useful representations for training a real robot, and that policies trained on them are as accurate as policies trained on demonstrations collected with a real robot.

AR2-D2 can also capture more complex tasks with longer horizons (i.e., more key-points).
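How key-points are selected from the hand trajectory is not detailed here, so the following is only one plausible heuristic, assumed rather than taken from AR2-D2: mark a frame as a key-point when the hand's grasp state flips or when the hand comes to rest after moving, and always keep the final frame. The `grasp` signal, the 0.02 m/s threshold, and the name `extract_keypoints` are hypothetical. Under such a scheme, a longer-horizon task simply produces more key-points.

```python
import numpy as np

def extract_keypoints(positions, grasp, fps=30.0, speed_thresh=0.02):
    """Return indices of key-point frames from a tracked hand trajectory.

    positions: (T, 3) hand positions in meters, one row per frame.
    grasp: (T,) booleans, True while the hand is closed (hypothetical signal).
    A frame is a key-point if the grasp state changes there, or if the hand
    speed drops below speed_thresh (m/s) right after moving. The last frame
    is always kept.
    """
    positions = np.asarray(positions, dtype=float)
    grasp = np.asarray(grasp, dtype=bool)
    speed = np.zeros(len(positions))
    # Finite-difference speed between consecutive frames
    speed[1:] = np.linalg.norm(np.diff(positions, axis=0), axis=1) * fps
    keys = []
    for t in range(1, len(positions)):
        grasp_changed = grasp[t] != grasp[t - 1]
        just_stopped = speed[t] < speed_thresh <= speed[t - 1]
        if grasp_changed or just_stopped:
            keys.append(t)
    if not keys or keys[-1] != len(positions) - 1:
        keys.append(len(positions) - 1)
    return keys
```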

We perform a diagnostic analysis of the number of demonstrations and training iterations required to ground a policy pre-trained on AR data in the real-robot deployment environment. We found that 5 demonstrations and 3,000 iterations (about 10 minutes of training) yield the best results.

AR2-D2 demonstrations store both 2D images and 3D depth data, which enables training both image-based behavior cloning (Image-BC) agents and 3D voxelized methods. With a fixed camera-calibration offset and no fine-tuning during training, agents with 3D input outperform their 2D counterparts. We evaluated both on three tasks.
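To make the two input types concrete, here is a minimal sketch of a per-frame demonstration record that can feed either an image-based BC agent or a 3D agent. The `DemoFrame` layout, its field names, and the 7-D end-effector action are hypothetical and not AR2-D2's storage format; the 3D path assumes a pinhole camera and a camera-to-world extrinsic, and its point cloud would still need to be voxelized for a voxel-based agent.

```python
from dataclasses import dataclass

import numpy as np

@dataclass
class DemoFrame:
    """One frame of a demonstration (hypothetical layout, not AR2-D2's format)."""
    rgb: np.ndarray          # (H, W, 3) uint8 frame of the processed AR-robot video
    depth: np.ndarray        # (H, W) depth in meters, 0 where missing
    K: np.ndarray            # (3, 3) camera intrinsics
    T_world_cam: np.ndarray  # (4, 4) camera-to-world extrinsics
    ee_pose: np.ndarray      # (7,) target end-effector pose: xyz + quaternion
    gripper_open: bool

    def image_input(self) -> np.ndarray:
        """2D input for image-based BC: float CHW array scaled to [0, 1]."""
        return self.rgb.astype(np.float32).transpose(2, 0, 1) / 255.0

    def world_points(self) -> np.ndarray:
        """3D input: colored point cloud (N, 6) = xyz in world frame + rgb in [0, 1]."""
        h, w = self.depth.shape
        u, v = np.meshgrid(np.arange(w), np.arange(h))
        z = self.depth
        x = (u - self.K[0, 2]) * z / self.K[0, 0]
        y = (v - self.K[1, 2]) * z / self.K[1, 1]
        pts = np.stack([x, y, z, np.ones_like(z)], axis=-1).reshape(-1, 4)
        pts_world = (self.T_world_cam @ pts.T).T[:, :3]  # camera frame -> world frame
        colors = self.rgb.reshape(-1, 3).astype(np.float32) / 255.0
        valid = z.reshape(-1) > 0  # keep only pixels with valid depth
        return np.hstack([pts_world, colors])[valid]
```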

AR2-D2 is limited in how faithfully it captures physical interactions, since our hands cannot replicate the exact forces exerted by a real robot. However, for most objects used in quasi-static manipulation tasks, the differences in physical properties are not large enough to impede the transfer of physical interactions from human-hand manipulation to real-robot manipulation. Prior work on training policies that map visual observations to actions (R3M, RT-1, SayCan, and PerAct) has also demonstrated this effectively.

@article{duan2023ar2,

title = {AR2-D2: Training a Robot Without a Robot},

author = {Duan, Jiafei and Wang, Yi Ru and Shridhar, Mohit and Fox, Dieter and Krishna, Ranjay},

journal = {arXiv preprint arXiv:2306.13818},

year = {2023},

}